High-End Chip G70: Only shimmering AF?

August 13, 2005 / by aths / Page 1 of 2

This is a slightly extended version of our original German-language article. We would like to thank some users of the 3DCenter Forum for their help in translating the original article: Gotteshand, moeb1us, Nicky, tEd and especially huha.

With the launch of the GeForce 7800 GTX, Nvidia showed that the strong performance of its former SM3 flagship, the GeForce 6800 Ultra, can still be topped. Besides more and improved pipelines, the G70 offers higher clock speeds. Useful new anti-aliasing modes were added as well.

In terms of texture quality, however, Nvidia seems to rate the demands of its high-end customers as fairly low. The new high-end chip G70 produces texture shimmering when anisotropic filtering (AF) is activated. Just as a reminder: this applies to the NV40 (GeForce 6800 series) at default driver settings as well, but there it can be remedied by activating the "High Quality" mode.

On the G70 chip, activating "High Quality" does not have the desired effect - the card still shows texture shimmering. Did Nvidia already manage to accustom consumers to texture shimmering with the NV40's default setting, so that its next chip, the G70, no longer offers any option to produce shimmer-free AF?

Anisotropic filtering is supposed to improve texture quality, but on your new card for 500 bucks, the result is texture shimmering even in High Quality mode. One can hardly consider this a real "quality improvement". Good quality naturally has an impact on rendering speed. However, "performance" in the sense of "power" is "the amount of work W done per unit of time t", while "performance" in the sense of "appearance" or "acting" includes the quality of the result. That is why the actual "performance" does not increase with such "optimized" AF.

Someone spending a few hundred bucks on a new card probably doesn't want to play without anisotropic filtering. One should also know that the 16x AF mode of the G70 chip, like that of the NV40, filters some areas with 2x AF at most, even though a consistent maximum of 4x AF or more would be far more helpful for producing detailed textures across the entire image. Naturally, 16x AF doesn't mean that every texture in the image is sampled with 16x AF; the degree depends on how strongly the resampled texture is distorted. But at some angles, even a 16:1 distortion gets only 2x AF, resulting in blurry textures there.
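To make the relation between distortion and AF degree concrete, here is a minimal sketch. It assumes that the filter rounds the distortion ratio up to the next power-of-two mode (2x, 4x, 8x, 16x); the function names and the `angle_cap` parameter are our own illustration and not a model of the actual hardware pattern.

```python
import math

def ideal_af_degree(distortion_ratio: float, max_af: int = 16) -> int:
    """AF degree an angle-independent filter would pick (assumption).

    distortion_ratio is the ratio of the long to the short axis of the
    pixel's footprint in texture space (1.0 means undistorted).
    """
    if distortion_ratio < 1.0:
        raise ValueError("distortion_ratio must be >= 1.0")
    # Round up to the next power of two, then clamp to the enabled mode.
    degree = 1 << math.ceil(math.log2(distortion_ratio))
    return min(degree, max_af)

def capped_af_degree(distortion_ratio: float, angle_cap: int,
                     max_af: int = 16) -> int:
    """Same as above, but with a hypothetical per-angle upper limit."""
    return min(ideal_af_degree(distortion_ratio, max_af), angle_cap)

# A 16:1 distortion would deserve 16x AF, but an angle limited to 2x gets 2x.
print(ideal_af_degree(16.0))                # 16
print(capped_af_degree(16.0, angle_cap=2))  # 2
```

With an angle-independent filter, only the distortion ratio decides the degree; with a per-angle cap, the same surface can receive very different degrees depending on its orientation.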

That was probably acceptable in the days of the R300 (Radeon 9500/9700 series), but today it is of course outdated. Sadly, the R420 chip suffers from this restriction, too; in fact, since the NV40, Nvidia has mimicked ATI's already poor AF pattern. For SM3 products like the NV40 or G70, we don't understand such trade-offs in AF quality.

At the launch of the GeForce 7800 GTX, only one site on the entire web described the problem of shimmering textures: the article on Hardware.fr by Damien Triolet and Marc Prieur. 3DCenter only offered benchmarks done in "Quality" mode (carelessly, we believed what Nvidia wrote in its Reviewer's Guide) and described technical aspects like the improvements in the pixel shader. But as long as the G70's texture problem exists, we see no reason to publish more about the shader hardware: first, the implementation of multi-texturing has to be satisfactory; then we can write about other things, too.

In older news we stated that the cause of the G70's AF shimmering was undersampling. Thanks to Demirug's investigations, we now know that the matter is more complicated than initially thought. The necessary texel data is in fact read from the cache, and all required texels are read, but they are combined in the wrong way. Nvidia's attempt to produce the same quality with less work was a good idea, but unfortunately it failed here. This could be a hardware bug or a driver error.

In the end, it does not matter: a G70 that delivers a texel fill rate of more than 10 gigatexels per second, yet uses only 2x AF at some angles while the user has enabled a mode called "16xAF", and whose textures also tend to shimmer, is evidence of the G70's inadequacy. We also have to criticize Nvidia's statement in the Reviewer's Guide, which reads (we also quote the bold typing):

> "Quality" mode offers users the highest image quality while still delivering exceptional performance. We recommend all benchmarking be done in this mode.

This setting results in shimmering on the NV40 and on the G70, but Nvidia calls it "the highest image quality". An image that claims to show "quality" (even without the "high") must of course not be prone to texture shimmering, but on Nvidia's SM3 product line it is. For High Quality, Nvidia writes:

> "High Quality" mode is designed to give discriminating users images that do not take advantage of the programmable nature of the texture filtering hardware, and is overkill for everyday gaming. Image quality will be virtually indistinguishable from "Quality" mode, however overall performance will be reduced. Most competitive solutions do not allow this level of control. Quantitative image quality analysis demonstrates that the NVIDIA "Quality" setting produces superior image fidelity to competitive solutions therefore "High Quality" mode is not recommended for benchmarking.

However, we cannot confirm this either: the anisotropic filter of the G70 chip also shows shimmering textures under "High Quality", while the current Radeon cards do not flicker even with "A. I." optimizations enabled. We would really like to know more about how Nvidia's "quantitative image quality analysis" examines image quality.

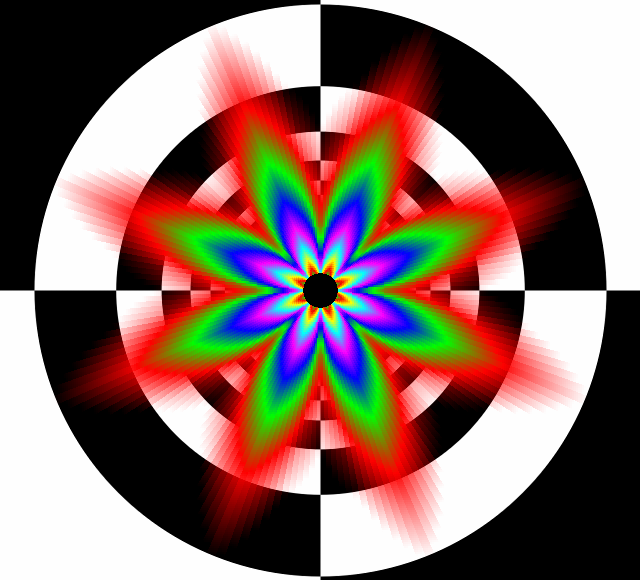

16x AF à la GeForce 6/7 vs. 8x AF à la GeForce 3/4/FX

The image shows a tunnel built of 200 segments (causing the "fan out" effect at the border of the picture). Each color represents a new MIP level. The nearer to the center a new color begins, the more detailed the texture is (i.e. the higher the AF level). At several angles, e.g. 22.5°, the MIPs on the GeForce 6/7 start very early (near the border), because only 2x AF is provided for these angles. The GeForce 3/4/FX has an angle "weakness" at 45° but shows a far more detailed image even at 8x AF, because the colored MIPs appear later, closer to the center.
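Why a lower AF degree makes the colored MIPs start earlier can be sketched with the textbook level-of-detail formula, as found for example in the OpenGL EXT_texture_filter_anisotropic extension: the MIP level is the base-2 logarithm of the footprint's long axis divided by the AF degree. The function name and the example numbers below are our own assumptions for illustration, not measurements from any chip.

```python
import math

def mip_lod(long_axis_texels: float, af_degree: int) -> float:
    """MIP level chosen for a pixel footprint under a given AF degree.

    long_axis_texels is the length of the footprint's long axis in texels
    of the base texture. Each AF sample covers 1/af_degree of that axis,
    so the filter can stay on a more detailed MIP level.
    """
    return max(0.0, math.log2(long_axis_texels / af_degree))

# Hypothetical footprint: 32 texels long, 2 texels wide (16:1 distortion).
print(mip_lod(32.0, af_degree=16))  # 1.0
print(mip_lod(32.0, af_degree=2))   # 4.0
```

In this example, the footprint filtered with only 2x AF lands three MIP levels further down than with 16x AF, which is exactly what shows up in the tunnel as colors starting near the border.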

Essentially, the GeForce 3/4/FX applies the enabled 8x AF to most of the image, while just a few parts are treated with 4x AF. In contrast, the GeForce 6/7 applies the enabled 16x AF only to minor parts of the image; only the 90° and 45° angles really get 16x AF. Most other angles are filtered in far less detail than the enabled 16x AF would suggest. Large areas of the image are filtered with just 2x and 4x AF, so the overall quality improvement is not as good as with the 8x AF of the GeForce 3/4/FX.

The overall quality improvement of the NV40's and G70's so-called 16x AF is, in fact, much smaller than that of 8x AF on the GeForce 3 to FX series. ATI's AF does not reach the traditional GeForce quality either, but at least ATI improved the capabilities of its AF with each truly new chip generation (the R420 is a beefed-up R300, not a new generation), while Nvidia lowered the hardware capabilities of its AF implementation compared to its own previous generation. The Reviewer's Guide is silent about that fact; it only highlights the availability of 16x since the NV40, making the reviewer think this must be better than the 8x provided by older GeForce series.

The angle dependency of the GeForce 6/7's AF adds an extra "sharpness turbulence" to the image: imagine very well filtered textures (with 16x AF) right next to only weakly filtered ones (with 2x AF), which strikes even the untrained eye as unpleasant. The geometry of "World of Warcraft" is a good example for seeing such artifacts (on any Radeon and on any GeForce since the NV40). Shouldn't an SM3 chip be able to do better, or at least provide the texture quality of 2001's GeForce3?

A very important note: this AF tunnel does not allow any conclusion regarding underfiltering; it only shows where each MIP level is used. Normally, you can tell which particular AF level is used by comparing the pattern with a LOD-biased pattern. But in the case of undersampling, fewer texels are sampled than actually required. For instance, undersampled 8x AF is not true 8x AF: the right MIP map is indeed chosen, but the wrong number of texels is used. As already stated, this tunnel only shows which MIP level is used and where, not whether the AF is implemented correctly.
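The difference between correct and undersampled filtering on the same MIP level can be illustrated with a deliberately simplified one-dimensional toy example. It assumes point samples instead of bilinear taps and a worst-case texture of alternating texels; all names are our own, and it is not meant to reproduce the behavior of any particular chip.

```python
def texture(i: int) -> float:
    """Worst-case 1D texture: texels alternate between 0.0 and 1.0."""
    return float(i % 2)

def filter_footprint(start: int, footprint: int, samples: int) -> float:
    """Average evenly spaced point samples across a footprint of texels."""
    stride = footprint // samples
    taps = [texture(start + k * stride) for k in range(samples)]
    return sum(taps) / samples

# Slide an 8-texel footprint across the texture, one texel per frame.
for start in range(4):
    full = filter_footprint(start, footprint=8, samples=8)   # true 8x
    under = filter_footprint(start, footprint=8, samples=4)  # undersampled
    print(start, full, under)
```

With all eight samples, the result stays at a stable 0.5 no matter where the footprint starts. With only four samples over the same footprint, the result jumps between 0.0 and 1.0 as the footprint moves by a single texel, which on screen would appear as shimmering. Both variants read from the same MIP level, which is why a MIP-coloring tunnel cannot tell them apart.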
